Over the past decade we have all but given up on analogue media. Paper is still holding out, but it has effectively surrendered: at Amazon, the world’s largest bookstore, sales of e-books have finally surpassed sales of printed books. There’s no turning back.

It’s nice to think that we’re living in unprecedented times, but there’s no such thing as a lack of precedent. The first centuries of our era also witnessed the replacement of one medium with another. The scroll, the basic carrier of information, was replaced by the codex: a stack of pages bound on one side and stitched to a cover.

The rise of the codex as the dominant medium was not without its costs. We lost most of our cultural legacy in the first centuries A.D., as only a small fraction of scrolls were copied into the new format. Whatever didn’t strike the scribes as particularly relevant was irreversibly lost.

Now the codex itself is growing antiquated and is giving way to electronic text. This shift raises non-trivial problems. Information in analogue form requires no special tools to be read. And today? Good luck finding a way to read text files saved on a computer twenty years ago. Simply locating a working 5.25” floppy disk drive would be a miracle in itself. Hence the enormous importance of standardised data formats. Without proper specifications and software, today’s files may be as hard to read a few decades from now as cuneiform is today.

Digitisation must be accompanied by a parallel development of digital “Rosetta stones”, otherwise our entire effort will have been in vain.

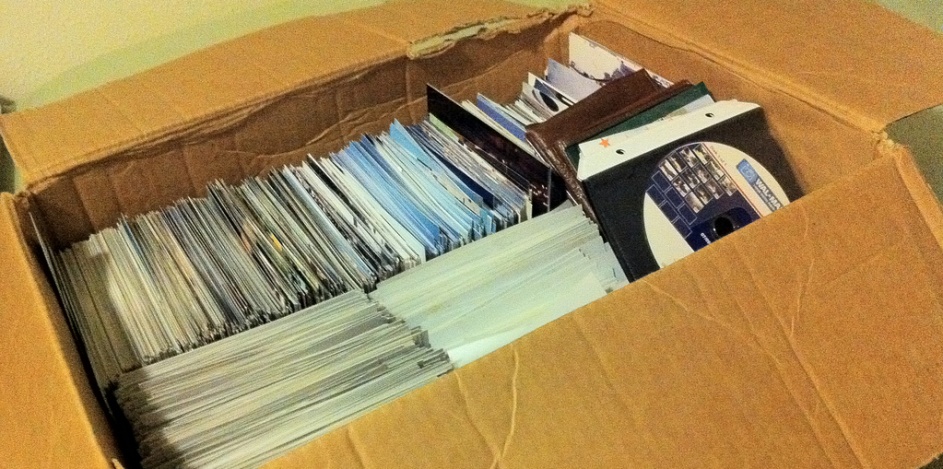

The sheer amount of information requiring urgent digitisation is a trap in itself. Modern digitisation programs focus on mass digitisation, following the principle of “the more, the better”. A look at the evaluation criteria used in grant competitions for digitisation projects shows that the basic and dominant indicator is the number of scans produced. The only supplementary indicator is the number of users served by a given repository or digital library. Isn’t something missing here?

We’re forgetting about the quality of digitisation. The number of scanned pages obviously matters, but it tells us precious little about how useful the digitised content is to the reader. Is the text searchable? How many errors does the digitised material contain? What additional functionality has the software implemented? Has adequate metadata been entered into the system? Can users export the content to the format of their choice? Is the material usable on mobile devices? Has it been annotated, tagged, and commented in a way that facilitates search and navigation? Has it been published in a form accessible to people with disabilities?

Factor in the legal issues surrounding the publication of copyrighted content, and these obstacles add up to a serious problem. Such criteria are conspicuously absent from programs that fund digitisation, with one commendable exception: the National Audiovisual Institute requires grant recipients to digitise public domain resources or to purchase the rights to the content. Yet the quality of digitised content is fundamentally important to the end user, since it separates the resources that are actually used in practice from those that serve, at best, to pad annual reports.

We may, of course, decide that digitisation quality is not a priority at this point. “Let’s just leave it for later.” In keeping with the remark attributed to Stalin that “quantity has a quality all its own”, we may decide that what we need is the maximum amount of information in raw, unprocessed form, and that editing and publishing can come later. But that simply won’t work in a world of fast-paced technological development. If we continue to conduct low-quality digitisation without investing in the tools and processes needed to properly edit and manage these resources, we will drop out of the race to the future. Even a cursory glance at the usability of sites such as Europeana and Google Books shows just how far ahead the leaders in the field of digitisation are.

Developing methods of rating the quality and innovativeness of digitisation processes will not be a simple task, but it is an effort that cannot be avoided. Without proper criteria and tools, we will just be producing massive quantities of scanned images, useful only to those who have no other choice.