Aiko and Kenji

People who are interested in cyborgs, robots, androids and all the freaky futurist, technology-related ideas usually search the web for updates on progress in the field. And there they meet Aiko. Aiko is a female android created by the Canadian-Vietnamese designer Le Trung: she has a silicone body, weighs 30 kg and is 152 cm tall. She speaks fluent English and Japanese and is very skilful at cleaning, washing windows and vacuuming. She reads books, distinguishes colours, and can learn and remember new things. What is more, when Le Trung tried to kiss her in public, she immediately hit him in the face. Undeterred by her violent behaviour, Le Trung talked about her as his wife. Sometimes, however, he referred to her as his child, or as a project that he would leave behind for posterity.

Le Trung insists that in a few years Aiko will become very much like a real woman. When this happens, he will gain a life companion and leave a treasure for the next generations: a fully human-like female android. It all sounds very promising, but unfortunately there is one “but”. Aiko cannot walk. She moves in a wheelchair. She is incapable of doing one very simple thing that every mature and healthy human being can easily do. Why is that? Well, first of all, Le Trung could not afford to pay for walking software. The main reason, however, is that Aiko’s current design is not compatible with any good software that enables walking. If Le Trung wanted Aiko to walk, he would have to replace her with a new, better model. But then he would lose everything he had achieved so far. Tough choice.

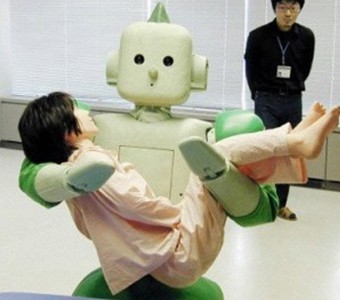

Looking for further examples, one comes across Kenji. Kenji belongs to a group of robots equipped with customised software that enables emotional responses to external stimuli. For Kenji’s creators, the biggest success was Kenji’s devotion to a doll that had been kept in the same room with him for a long time. When the doll was gone, Kenji immediately started asking where it was and when it would come back. He missed the doll. When it returned, he hugged it all the time. The scientists were overwhelmed by this success: they had their first emotional machine! Through many weeks of iterated behaviour driven by complex code, Kenji had developed something that could roughly be described as a feeling of tenderness. His carers enjoyed his progress until Kenji’s sensitivity turned dangerous. At some point, Kenji started to show the level of attachment characteristic of a psychopath.

Kenji, source: http://uk.ign.com

It all started one day when a young student appeared at the lab. Her task was to test new procedures and software for Kenji, and she regularly spent time with him. But on the day her internship ended, Kenji protested in a rather blunt way: he would not let her leave the lab, and hugged her so hard with his hydraulic arms that she could not get free. Fortunately, the student managed to escape when two staff members came to her rescue and turned the robot off. After that event, a worried Dr. Takahashi, Kenji’s main carer, admitted that the research group’s enthusiasm had been premature. What is even worse, since the incident with the girl, every time Kenji is activated he reacts the same way towards the first person he encounters. He immediately wants to hug the victim and loudly declares his love and affection through the 20-watt speaker he is equipped with. However, Dr. Takahashi does not want to turn Kenji off for good. He believes that the day will come when, thanks to various improvements, a less compulsive Kenji will be able to meet people without frightening them.

The so-called AI winter, or: “The vodka is good, but the meat is rotten”

Artificial Intelligence (AI) researchers often show little restraint in fuelling society’s hopes for a robotic revolution. On the contrary, they usually announce that every new cyborg will change the world. The situation with the handicapped super-robot Aiko and the hyper-emotional Kenji is characteristic of the whole field of AI. The field has a long record of failed experiments, investment failures and successes that in the end were not successes at all.

Indeed, few research areas have gone through so many bursts of enthusiasm alternating with waves of criticism, resulting in substantial funding cuts. The history of Artificial Intelligence even has a well-known term for this: “AI winter”, meaning a period of reduced funding for research. The term was coined by analogy with the idea of nuclear winter. It first appeared in 1984 as the subject of a public debate at the annual meeting of the American Association of Artificial Intelligence. It briefly described the emotional state of the AI research community: a collapse of faith in the future of the field and a pessimism that was increasingly difficult to conceal.

This mood had its roots in the lack of success in machine translation. During the Cold War, the U.S. government became interested in the automatic translation of Russian documents and scientific reports, and in 1954 the U.S. launched a programme to support building a translation machine. At first, the researchers were very optimistic. Noam Chomsky’s groundbreaking work on generative grammar was harnessed to improve the process. But the researchers were defeated by the ambiguity and context-dependence of language.

Without context, the machine made funny errors. An anecdotal example is a sentence that was first translated from English into Russian and then back into English: “The spirit indeed is willing, but the flesh is weak” came out as “The vodka is good, but the meat is rotten.” Similarly, “Out of sight, out of mind” became “The blind idiot”.

In 1964, the U.S. National Research Council (NRC), concerned about the lack of progress, created the Automatic Language Processing Advisory Committee (ALPAC) to take a closer look at the problem of translation. In 1966, the committee concluded that machine translation was not only more expensive than human translation, but also less accurate and slower. Having considered the committee’s conclusions, and after spending approximately USD 20 million, the NRC ended all further support. And this was only the beginning of the problems.

Two major AI winters followed, in 1974-1980 and 1987-1993.

In the 1960s, the Defense Advanced Research Projects Agency (DARPA, then still called ARPA) spent millions of dollars on AI. J. C. R. Licklider, the founding director of the agency’s Information Processing Techniques Office, believed deeply in investing in people rather than in specific projects. Public money flowed generously to the leaders of AI: Marvin Minsky, John McCarthy, Herbert A. Simon and Allen Newell. It was a time when confidence in the development of Artificial Intelligence and its potential for the military was unwavering. AI reigned not only economically but also ideologically. Cybernetics became a sort of new metaphysical paradigm.

It promised that the ideal machine would soon surpass humans intellectually while still serving and protecting them. However, after nearly a decade of unlimited spending that had not led to any breakthrough, the government grew impatient. The Senate passed an amendment requiring DARPA to fund specific, mission-oriented research projects directly. Researchers were supposed to demonstrate that their work would soon benefit the military. That did not happen, and a study report conducted by DARPA was damning.

DARPA was deeply disappointed with the results of the scientists working on speech understanding at Carnegie Mellon University. DARPA hoped for a system that could respond to remote voice commands. Although the research team had developed a system that could recognise English, it worked properly only when the words were spoken in a specific order. DARPA felt cheated and in 1974 cancelled a three-million-dollar grant. Cuts in government-funded research affected all academic centres in the United States. It was not until many years later that speech recognition tools based on Carnegie Mellon technology finally succeeded: the speech recognition market reached a value of USD 4 billion in 2001.

Lighthill report vs. fifth generation

The situation in the UK was much the same. Funding for AI was cut in response to the so-called Lighthill report of 1973. Professor Sir James Lighthill had been asked by Parliament to evaluate the state of AI research in the UK. According to Lighthill, AI was unnecessary: other fields could achieve more and needed the funding instead. A major problem was the intractability of the issues AI struggled with. Lighthill noticed that many AI algorithms that were spectacular in theory turned to dust when confronted with reality. The machine’s collision with the real world seemed insurmountable. The report led to the almost complete dismantling of AI research in England.

photo: Erik Charlton / Flickr CC

Interest in Artificial Intelligence revived only in 1983, when Alvey was launched: a research project funded by the British government and worth 350 million pounds. Two years earlier, the Japanese Ministry of International Trade and Industry had allocated 850 million USD to the so-called fifth-generation computers. The aim was to write programmes and build machines that could carry on a conversation, translate easily into foreign languages and interpret photographs and paintings; in other words, to achieve an almost human level of rationality. The British Alvey was a response to that project. By 1991, however, most of the tasks set for Alvey had not been achieved. Many of them are still waiting, and it is 2013. As with other AI projects, expectations were simply too high.

But let’s go back to 1983. DARPA once again began to fund research in artificial intelligence. The long-term goal was so-called strong Artificial Intelligence (strong AI), which, according to John Searle, who coined the term, would mean a machine that genuinely has a mind, as a human does. Incidentally, both Aiko and Kenji are attempts to realise this very concept. By 1985, the U.S. government had spent 100 million dollars on 92 projects at sixty institutions in industry, universities and government labs. However, two leading AI researchers who had survived the first AI winter, Roger Schank and Marvin Minsky, warned government and business against excessive enthusiasm. They believed that the ambitions of AI had got out of control and that disappointment was inevitable. Hans Moravec, a well-known researcher and Artificial Intelligence enthusiast, claimed that the crisis was caused by the unrealistic predictions of his colleagues, who kept repeating the story of a bright future. Just three years later, the billion-dollar AI industry began to decline.

A few projects survived the funding cuts. Among them was DART, a combat management system. It proved very successful, saving billions of dollars during the first Gulf War. It repaid the cost of many of DARPA’s less successful investments, soothed grievances and justified the agency’s pragmatic policies. Such examples, however, were few.

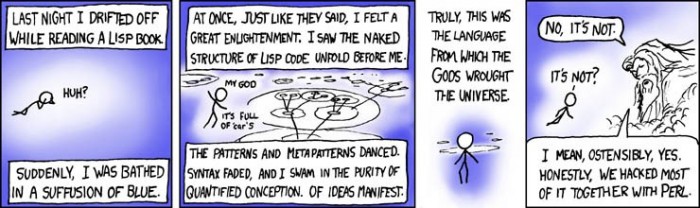

Great expectations and the Lisp machines

The history of Lisp is also significant for AI, but it has a rather perverse ending. Lisp is a family of programming languages that proved essential to the development of artificial intelligence. In general, Lisp is a high-level language built around symbolic list processing and capable of great abstraction, which made it well suited to representing and manipulating natural language as spoken by people. Lisp quickly became a popular programming language for artificial intelligence. It was used, for example, to implement the programming language on which SHRDLU was based: a groundbreaking computer programme designed by Terry Winograd that understood typed commands in English.

As AI research developed in the 1970s, the performance of existing Lisp systems became a problem. Lisp is quite a sophisticated language, so it proved difficult to implement efficiently on the hardware of the day. This led to the so-called Lisp machines: computers optimised for running Lisp. Today, building machines to support a single language would be unthinkable! At the time, though, it seemed quite obvious. To keep up, Lisp Machines Inc. and other companies such as Lucid and Franz offered ever more powerful Lisp systems. However, progress in computer hardware and compilers soon made Lisp machines obsolete. Workstations from companies such as Sun Microsystems offered a powerful alternative, and Apple and IBM had begun to build PCs that were easier to use. The turning point came in 1987, when these other computers became more powerful than the more expensive Lisp machines. An entire industry worth half a billion U.S. dollars was wiped out in a single year, just as Minsky and Schank had predicted.

Lisp, however, rose from the dead. In the 1980s and 1990s, Lisp developers made a great effort to unify its many dialects into a single language. The result, Common Lisp, was largely a compatible subset of those dialects. Its laboriously built position was, however, weakened by the rise of other popular programming languages such as C++ and Java. Nonetheless, Lisp has seen renewed interest since 2000. Paradoxically, it owes its newly gained popularity to an old medium: the book. Peter Seibel’s textbook Practical Common Lisp, published in 2004, was the second most popular programming title on Amazon. Seibel, like several other authors (among them the well-known computer scientist Peter Norvig), was intrigued by the idea of popularising a language considered obsolete. For them, the task resembled that of the pioneers who brought Hebrew back into everyday use in Israel. It turned out that the outdated language had its advantages: it gives developers a new perspective on programming, which makes them much more effective. As we can see, Artificial Intelligence takes strange paths.

Fear of the next winter

Now, in the early twenty-first century, when AI has become commonplace, its successes are often marginalised, mainly because AI has become obvious to us. Nick Bostrom has even said that intelligent objects become so familiar that people forget they are intelligent. Rodney Brooks, an innovative and highly talented researcher and programmer, agrees. He points out that despite the widespread view that Artificial Intelligence has failed, it surrounds us at every turn.

Technologies developed by Artificial Intelligence researchers have achieved commercial success in many areas, such as machine translation (initially written off as a failure), data mining, industrial robotics, logistics, speech recognition and medical diagnostics. Fuzzy logic (a logic in which truth values are not limited to 0 and 1 but can take any intermediate value between them) has been harnessed to build automatic transmissions in the Audi TT, the VW Touareg and Skoda vehicles.
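To make the idea of intermediate truth values a little more concrete, here is a minimal Python sketch of fuzzy membership. It is a toy, not the logic of any real gearbox: the fuzzy sets for throttle position, their breakpoints and the suggested shift speeds are all hypothetical values chosen for illustration. A throttle reading belongs partly to several overlapping sets ("light", "moderate", "heavy") at once, and the suggested upshift speed is a weighted average of each rule's output.

```python
from __future__ import annotations

# Hypothetical illustration of fuzzy logic: a throttle position (0-100 %) belongs
# partly to several overlapping sets at once, instead of being just "low" or "high".
# None of these numbers come from a real transmission.


def triangular(x: float, a: float, b: float, c: float) -> float:
    """Membership degree (0.0 to 1.0) in a triangle that starts at a, peaks at b, ends at c."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Fuzzy sets for throttle position; the breakpoints are illustrative assumptions.
THROTTLE_SETS = {
    "light": (-50.0, 0.0, 50.0),
    "moderate": (0.0, 50.0, 100.0),
    "heavy": (50.0, 100.0, 150.0),
}

# One hypothetical rule per set: "if throttle is <set>, shift up at <rpm>".
SHIFT_RPM = {"light": 2000.0, "moderate": 3500.0, "heavy": 5500.0}


def memberships(throttle: float) -> dict[str, float]:
    """Degree to which a throttle value (0-100) belongs to each fuzzy set."""
    return {name: triangular(throttle, *abc) for name, abc in THROTTLE_SETS.items()}


def upshift_rpm(throttle: float) -> float:
    """Blend the rule outputs, weighting each one by its membership degree."""
    degrees = memberships(throttle)
    total = sum(degrees.values())  # at least one set is active for 0-100
    return sum(degrees[name] * SHIFT_RPM[name] for name in degrees) / total


if __name__ == "__main__":
    for t in (0, 25, 50, 75, 100):
        print(t, memberships(t), upshift_rpm(t))
```

For example, a throttle of 25 % is half "light" and half "moderate", so the sketch suggests shifting at 2,750 rpm, halfway between the two rules' values. Blending rule outputs by membership degree is only one common way to turn fuzzy degrees back into a single number; it is used here because it fits in a few lines.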

source: spiderlily-studio.webs.com

The fear of another winter gradually gave way. Some researchers continued to voice concern that a new AI winter might be triggered by another overly ambitious project or by an unrealistic promise made to the public by eminent scientists. There were, for example, fears that the robot Cog would ruin AI’s barely restored reputation. But that did not happen. Cog was a project carried out in the Humanoid Robotics Group at MIT by the already mentioned Rodney Brooks, together with a multidisciplinary group of researchers that included the well-known philosopher Daniel Dennett. The Cog project was based on the assumption that human-level intelligence requires experience gathered through contact with people. So Cog was to engage in such interactions and to learn the way infants learn. The project’s aims included, among others, (1) to design and build a humanoid face that would help the robot establish and maintain social contact with people, (2) to create a robot that could act on people and objects as a human does, (3) to build a mechanical proprioception system, and (4) to develop complex systems of sight, hearing, touch and vocalisation. The list of tasks alone shows how ambitious Cog was! Given the (surprise, surprise!) lack of the expected results, Cog’s funding was cut in 2003. But this time, the end of a partially successful project was not a disaster. Cog shaped the collective imagination around the idea of something resembling a human being. The same happened with Deep Blue, which, despite some setbacks, was an excellent chess champion, and that was all that mattered.

Waiting for spring

A peak of inflated expectations clashing with a deep pit of disappointment: that is the best way to describe the emotions surrounding AI. Watching the mood swings around artificial intelligence, I gradually changed my view of the goals of creating an intelligent machine. For a long time I thought that a humanoid robot had to be a mirror of man. But at some point I started asking myself: why do we want the robot to be more perfect than a human? Why don’t we let it fail? Why, when it fails anyway, do we stubbornly try again? After all, it is not as if technology will solve all the riddles of humanity and create an artificial human being in a few years. That will not happen soon. So is there some other factor that drives people to keep working in this absurd field? After all, if you think about it, even the name Artificial Intelligence is quite grotesque. And I think to myself that the machine is not exactly a mirror; rather, it is something that has to remain different, yet still needs to surpass us. The otherness of the machine makes human beings feel safe. We don’t like resemblance; it is disturbing. Various pop-culture visions of androids and cyborgs taking control of the world confirm this. Because the machine becomes better than us at things we don’t feel like doing, while still remaining quite different from us, we behave towards it like somewhat pathological parents. We challenge it, demand results, get offended by its failures and then return with a new dose of energy and expectations.

So, to make a long story short, it doesn’t matter that Aiko and Kenji get lost in the human world. They are the Others, they are weird, but they make a beautiful couple, each doing what they do best: he hugs and she punches.